HTML5 Video Formats: Codecs, Protocols, and Compatibility

From desktop to mobile to CTV, the range of devices viewers use to watch video is wide and constantly expanding. For content producers, this means raw content must be seamlessly converted into a variety of HTML5 video formats.

While HTML5 eliminated the need for additional plugins, the broad range of devices still requires different HTML5 video formats to maximize playability, quality, and security. Before exploring specific HTML5 video types and which are best for different scenarios, let’s take a closer look at how video is converted into these formats.

What Is Video Encoding?

Simply put, video encoding is the process of converting raw video data from cameras into a compressed digital format.

Encoding uses a codec (short for coder/decoder) to compress large video files into a more manageable size for both transmission and storage. When it’s time for playback, the codec decompresses the file so it can be viewed in its original or near-original quality. This compression and decompression process optimizes video files for efficient storage and minimal bandwidth usage.

However, encoding alone doesn’t necessarily make the content playable on all devices. Transcoding, though often confused with encoding, converts already-compressed video from one codec or format to another (e.g., decoding an Apple ProRes .mov file and re-encoding it as H.264 in an .mp4 file).
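To put the compression step in perspective, here is a back-of-the-envelope calculation. It assumes uncompressed 8-bit video with 4:2:0 chroma subsampling (12 bits per pixel on average) and an illustrative 6 Mbps H.264 delivery bitrate for 1080p at 30 fps; real encoder settings vary widely.

```python
def raw_bitrate_bps(width: int, height: int, fps: float,
                    bits_per_pixel: float = 12) -> float:
    """Bitrate of uncompressed video in bits per second.

    bits_per_pixel=12 assumes 8-bit samples with 4:2:0 chroma subsampling:
    8 bits of luma per pixel plus two chroma planes at quarter resolution.
    """
    return width * height * bits_per_pixel * fps


raw = raw_bitrate_bps(1920, 1080, 30)  # 1080p at 30 frames per second
encoded = 6_000_000  # assumed H.264 delivery bitrate for 1080p30 (illustrative)

print(f"raw:     {raw / 1e6:.1f} Mbps")      # raw:     746.5 Mbps
print(f"encoded: {encoded / 1e6:.1f} Mbps")  # encoded: 6.0 Mbps
print(f"ratio:   {raw / encoded:.0f}:1")     # ratio:   124:1
```

Under these assumptions the codec shrinks the stream by more than two orders of magnitude, which is what makes streaming over ordinary internet connections practical.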

But what exactly is a codec, and how does it differ from a container?

Codecs vs Container Formats

- Codecs. Codecs are the algorithms or software used to compress and decompress the raw data. Popular HTML5 video codecs include H.264 (AVC), H.265 (HEVC), VP9, and AV1; popular audio codecs include AAC, MP3, and Opus.

- Containers. Containers are file formats, such as MP4 and WebM, that combine the encoded video and audio into a single file. They also carry metadata and other video-specific information like closed captions and chapter markers.
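In HTML5, this distinction shows up directly in the `type` attribute of a `<source>` element: the MIME type names the container, and the optional `codecs` parameter names the codecs inside it. The sketch below assembles such strings; the codec strings used (`avc1.42E01E` for H.264 Constrained Baseline, `mp4a.40.2` for AAC-LC, and `vp9`/`opus` for WebM) and the file names are illustrative choices, not requirements.

```python
# Container formats map to MIME types; codecs are declared separately.
CONTAINER_MIME = {
    "mp4": "video/mp4",
    "webm": "video/webm",
}


def source_type(container: str, codecs: list[str]) -> str:
    """Build a value for the HTML5 <source type="..."> attribute."""
    mime = CONTAINER_MIME[container]
    return f'{mime}; codecs="{", ".join(codecs)}"'


def source_tag(src: str, container: str, codecs: list[str]) -> str:
    # Single quotes around the attribute because the codecs value uses double quotes.
    return f"<source src=\"{src}\" type='{source_type(container, codecs)}'>"


print(source_tag("movie.webm", "webm", ["vp9", "opus"]))
print(source_tag("movie.mp4", "mp4", ["avc1.42E01E", "mp4a.40.2"]))
```

A browser tries each `<source>` in order and uses the first one it can play, so listing a WebM/VP9 option before an MP4/H.264 fallback is a common pattern.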

Video Codecs

Each codec offers unique benefits and trade-offs in terms of compression efficiency, video quality, and compatibility with different devices and platforms. You’ll need to familiarize yourself with the most common types to understand which one(s) will work best for your specific needs.

- H.264. Also known as Advanced Video Coding (AVC), this widely used codec produces high-quality video at relatively small file sizes, and it’s compatible with most devices and platforms.

- H.265. High Efficiency Video Coding (HEVC) is another common codec that is well suited to high-quality video like 4K, since it needs roughly half the bitrate of AVC for comparable quality. In fact, the “high efficiency” in HEVC refers to its compression capabilities.

- H.266 and open-source codecs. H.266, or Versatile Video Coding (VVC), is one of the newest compression standards and can reduce bitrate by up to roughly 50% compared with HEVC at similar quality. This makes it ideal for 4K streaming as well as 8K and beyond. Open-source codecs, like Google’s VP9 and the Alliance for Open Media’s AV1, are also pushing compression efficiency further. However, like VVC, these codecs have narrower device and browser compatibility than AVC, and AV1 adoption in particular is still maturing.

Codec Compatibility with Browsers and Devices

Browser and device compatibility varies widely across video codecs. AVC enjoys the broadest support across nearly all common systems, while VVC is so new that it has virtually no browser or consumer-device playback support yet. HEVC is broadly supported on devices and smart TVs but remains inconsistent in mobile browsers. VP9 and AV1 have both made strong strides in the market, but their support on Apple browsers and devices still lags behind other platforms.

Desktop Browsing Support

| Codec | Google Chrome | Mozilla Firefox | Microsoft Edge | Safari | Opera |
|---|---|---|---|---|---|
| H.264 (AVC) | ✓ | ✓ | ✓ | ✓ | ✓ |
| H.265 (HEVC) | ✓ | ✗ | ✓ (Windows 10+ with hardware support) | ✓ (macOS High Sierra+) | ✗ |
| H.266 (VVC) | ✗ | ✗ | ✗ | ✗ | ✗ |
| VP9 | ✓ | ✓ | ✓ | ✗ | ✓ |
| AV1 | ✓ | ✓ | ✓ | ✗ | ✓ |

Mobile Browsing Support

| Codec | Chrome (Android) | Firefox (Android) | Safari (iOS) | Edge (Android / iOS) | Opera (Android / iOS) |
|---|---|---|---|---|---|
| H.264 (AVC) | ✓ | ✓ | ✓ | ✓ | ✓ |
| H.265 (HEVC) | ✗ | ✗ | ✓ (iOS 11+) | ✗ | ✗ |
| H.266 (VVC) | ✗ | ✗ | ✗ | ✗ | ✗ |
| VP9 | ✓ | ✓ | ✗ | ✓ | ✓ |
| AV1 | ✓ | ✓ | ✗ | ✓ | ✓ |

Mobile Device Support

| Codec | Android Devices | iOS Devices | Samsung Devices | Google Pixel | Huawei Devices |
|---|---|---|---|---|---|
| H.264 (AVC) | ✓ | ✓ | ✓ | ✓ | ✓ |
| H.265 (HEVC) | ✓ (limited) | ✓ (iOS 11+) | ✓ (limited) | ✓ | ✓ (limited) |
| H.266 (VVC) | ✗ | ✗ | ✗ | ✗ | ✗ |
| VP9 | ✓ | ✗ | ✓ | ✓ | ✓ |
| AV1 | ✓ | ✗ | ✓ | ✓ | ✓ |

Smart TV Support

| Codec | Samsung Smart TVs | LG Smart TVs | Sony Smart TVs | Roku TVs | Apple TV |
|---|---|---|---|---|---|
| H.264 (AVC) | ✓ | ✓ | ✓ | ✓ | ✓ |
| H.265 (HEVC) | ✓ | ✓ | ✓ | ✓ | ✓ |
| H.266 (VVC) | ✗ | ✗ | ✗ | ✗ | ✗ |
| VP9 | ✓ | ✓ | ✓ | ✓ | ✗ |
| AV1 | ✓ (newer models) | ✓ (newer models) | ✓ (newer models) | ✓ | ✗ |
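Tables like these can drive a simple encoding decision. The Python sketch below transcribes a subset of the desktop and mobile browser tables and either picks one codec every target can play or picks a small set of codecs so each target gets its most efficient option, with AVC as a universal fallback. The efficiency ranking and the choice to treat hardware-dependent or “limited” cells as unsupported are assumptions for illustration.

```python
# Subset of the browser tables above. Cells marked as hardware-dependent or
# "limited" are treated as unsupported to stay conservative.
SUPPORT: dict[str, set[str]] = {
    "chrome": {"avc", "hevc", "vp9", "av1"},
    "firefox": {"avc", "vp9", "av1"},
    "edge": {"avc", "vp9", "av1"},  # HEVC depends on hardware support
    "safari": {"avc", "hevc"},
    "safari_ios": {"avc", "hevc"},
    "chrome_android": {"avc", "vp9", "av1"},
}

# Assumed preference order, most bandwidth-efficient first. VVC is omitted
# because the tables show no support anywhere.
PREFERENCE = ["av1", "hevc", "vp9", "avc"]


def best_codec(target: str) -> str:
    """Most efficient codec a single target can decode."""
    return next(c for c in PREFERENCE if c in SUPPORT[target])


def common_codec(targets: list[str]) -> str:
    """Most efficient codec every target can decode (AVC in the worst case)."""
    return next(c for c in PREFERENCE if all(c in SUPPORT[t] for t in targets))


def encode_plan(targets: list[str]) -> list[str]:
    """Codecs to encode so each target gets its best option, plus an AVC fallback."""
    chosen = {best_codec(t) for t in targets} | {"avc"}
    return [c for c in PREFERENCE if c in chosen]


print(common_codec(["chrome", "safari"]))                # hevc
print(common_codec(["chrome", "firefox", "safari"]))     # avc
print(encode_plan(["chrome", "firefox", "safari_ios"]))  # ['av1', 'hevc', 'avc']
```

The trade-off is storage and encoding cost: a single common codec is cheapest to produce, while a multi-codec plan saves bandwidth for viewers whose devices support newer codecs.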

Encoding Considerations

- Quality (4K+ support). For content producers aiming to deliver HD and UHD (4K and beyond) videos, the choice of codec should be a top consideration. H.265 (HEVC) and H.266 (VVC) provide the best compression efficiencies for maintaining high video quality at lower bitrates. Open-source codecs like AV1 are also great for high quality videos like 4K; just be sure that the devices and platforms you’re targeting support them.

- Latency. Latency is the delay between when a moment is captured and when the viewer sees it on screen. If you’re live streaming or delivering interactive video, low latency is crucial, since even small delays can significantly affect the viewer experience. H.264 (AVC) is computationally lightweight and has mature, widely available hardware encoders, so it can be encoded quickly and is often used for real-time applications. Newer HTML5 codecs like AV1 may provide better compression, but their more complex encoding processes can add latency.

- Licensing. You’ll also want to consider licensing costs when deciding which codec is best for you. Patent-encumbered codecs like H.265 (HEVC) and H.266 (VVC) can require licensing fees, which can quickly add up when distributing at scale, whereas VP9 and AV1 are offered royalty-free.

What Is Video Packaging?

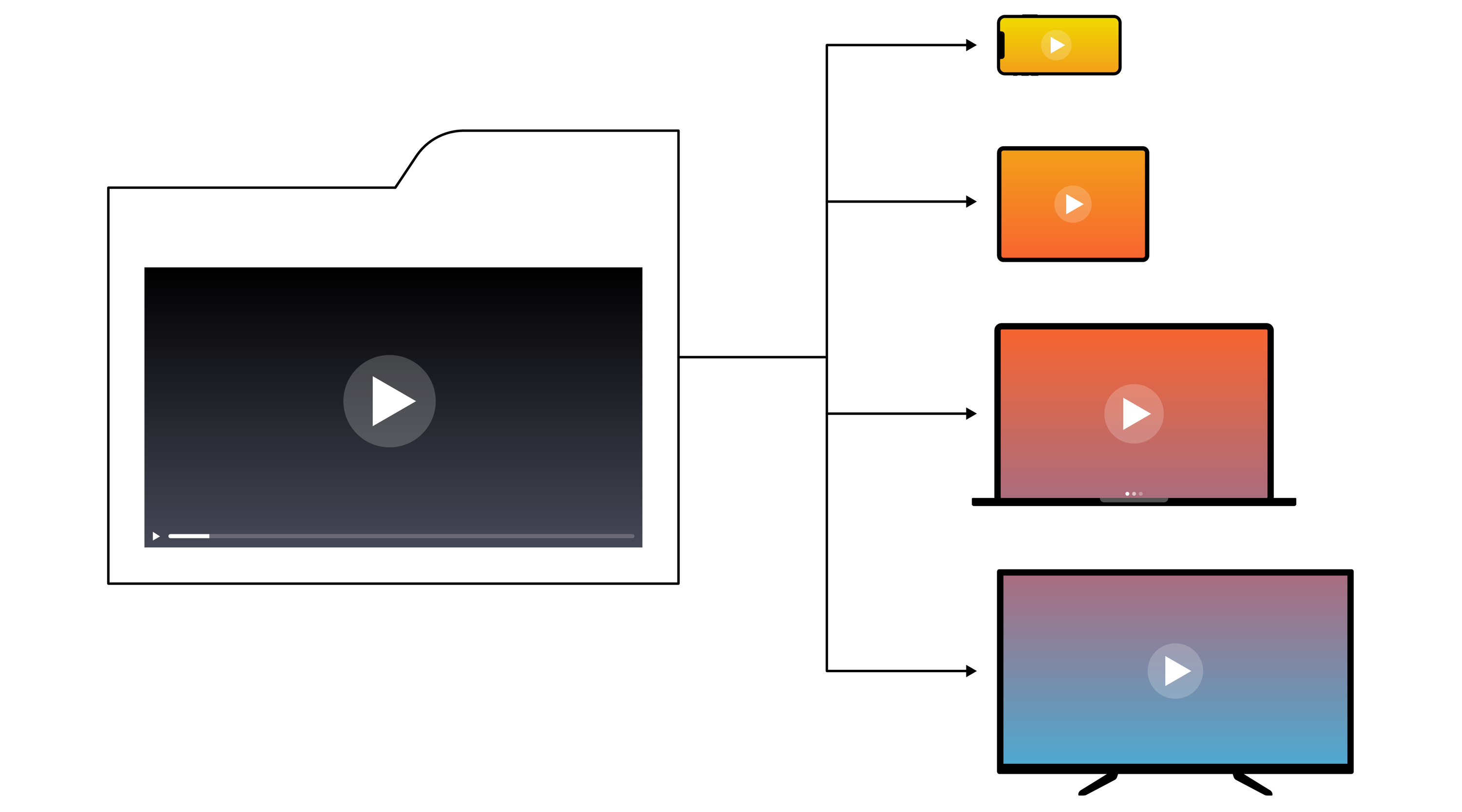

Once the codec has compressed the video data, packaging prepares that data for delivery to the viewer.

Video packaging is the process that combines all of the necessary elements for a quality viewing experience. This includes encoded video and audio files, manifests that synchronize the files, metadata, and subtitles.

Here’s a brief look at how it works:

- Encoding. First, the raw video and audio data are encoded using codecs, and the file sizes are compressed for easier storage and transmission.

- Multiplexing (muxing). The encoded video and audio streams are then combined into a container file and organized for seamless playback. Examples include MP4, MKV, and WebM.

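- Property selection. The producer defines key properties for each version (rendition) of the video, including bitrate, frame rate, and resolution. These are set during encoding and recorded in the container and manifest so the player knows what each rendition offers.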

- Adding metadata. Metadata like title, duration, chapter markers, and subtitles are added to the container.
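The muxing and metadata steps can be illustrated with ffmpeg, a widely used open-source tool. The sketch below only builds the argument list; it assumes ffmpeg is installed, and the input files, title, and output name are hypothetical. The `-c:v copy` and `-c:a copy` options keep the already-encoded streams untouched, while text subtitles are converted to `mov_text`, the subtitle format MP4 uses.

```python
import shlex


def mux_command(video: str, audio: str, subtitles: str, title: str,
                output: str) -> list[str]:
    """Build an ffmpeg argument list that muxes encoded streams into one MP4."""
    return [
        "ffmpeg",
        "-i", video,      # input 0: encoded video
        "-i", audio,      # input 1: encoded audio
        "-i", subtitles,  # input 2: subtitle file, e.g. SRT
        # Take video from input 0, audio from input 1, subtitles from input 2.
        "-map", "0:v", "-map", "1:a", "-map", "2:s",
        # Copy video and audio as-is (no re-encoding); convert subtitles for MP4.
        "-c:v", "copy", "-c:a", "copy", "-c:s", "mov_text",
        "-metadata", f"title={title}",  # container-level metadata
        output,
    ]


cmd = mux_command("video.mp4", "audio.m4a", "captions.srt",
                  "Product Launch", "packaged.mp4")
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would execute it; building a list rather than a shell string avoids quoting problems with titles that contain spaces.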

While they share some similarities, it’s important to distinguish packaging from transmuxing. Packaging creates new containers, while transmuxing converts the container from one format to another without changing the original audio, video, and metadata content. For example, you might transmux H.264/AAC video from an MKV file into an MP4 file, or from MP4 into MPEG-2 Transport Stream (.ts) segments for HLS, to improve compatibility with different devices and platforms.
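Whether transmuxing is possible depends on the codecs, not just the containers. WebM, for example, is defined for VP8/VP9/AV1 video with Vorbis/Opus audio, so H.264/AAC content cannot simply be rewrapped as WebM. The sketch below checks a stream’s codecs against an illustrative (not exhaustive) table of what each container accepts and, if everything fits, builds an ffmpeg stream-copy command; the file names are hypothetical.

```python
from typing import Optional

# Illustrative, not exhaustive: codecs each container can carry.
CONTAINER_CODECS = {
    "mp4": {"avc", "hevc", "vp9", "av1", "aac", "mp3", "opus"},
    "webm": {"vp8", "vp9", "av1", "vorbis", "opus"},
    "mpegts": {"avc", "hevc", "aac", "mp3"},
}


def can_transmux(stream_codecs: set[str], container: str) -> bool:
    """True if every stream can be copied into the container unchanged."""
    return stream_codecs <= CONTAINER_CODECS[container]


def transmux_command(stream_codecs: set[str], source: str, target: str,
                     container: str) -> Optional[list[str]]:
    """ffmpeg stream-copy command, or None if transcoding would be required."""
    if not can_transmux(stream_codecs, container):
        return None
    # -c copy rewraps the existing streams without decoding or re-encoding.
    return ["ffmpeg", "-i", source, "-c", "copy", target]


h264_aac = {"avc", "aac"}
print(transmux_command(h264_aac, "talk.mkv", "talk.mp4", "mp4"))
print(transmux_command(h264_aac, "talk.mkv", "talk.webm", "webm"))  # None
```

When the function returns `None`, the content has to be transcoded (decoded and re-encoded with a supported codec) rather than transmuxed.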

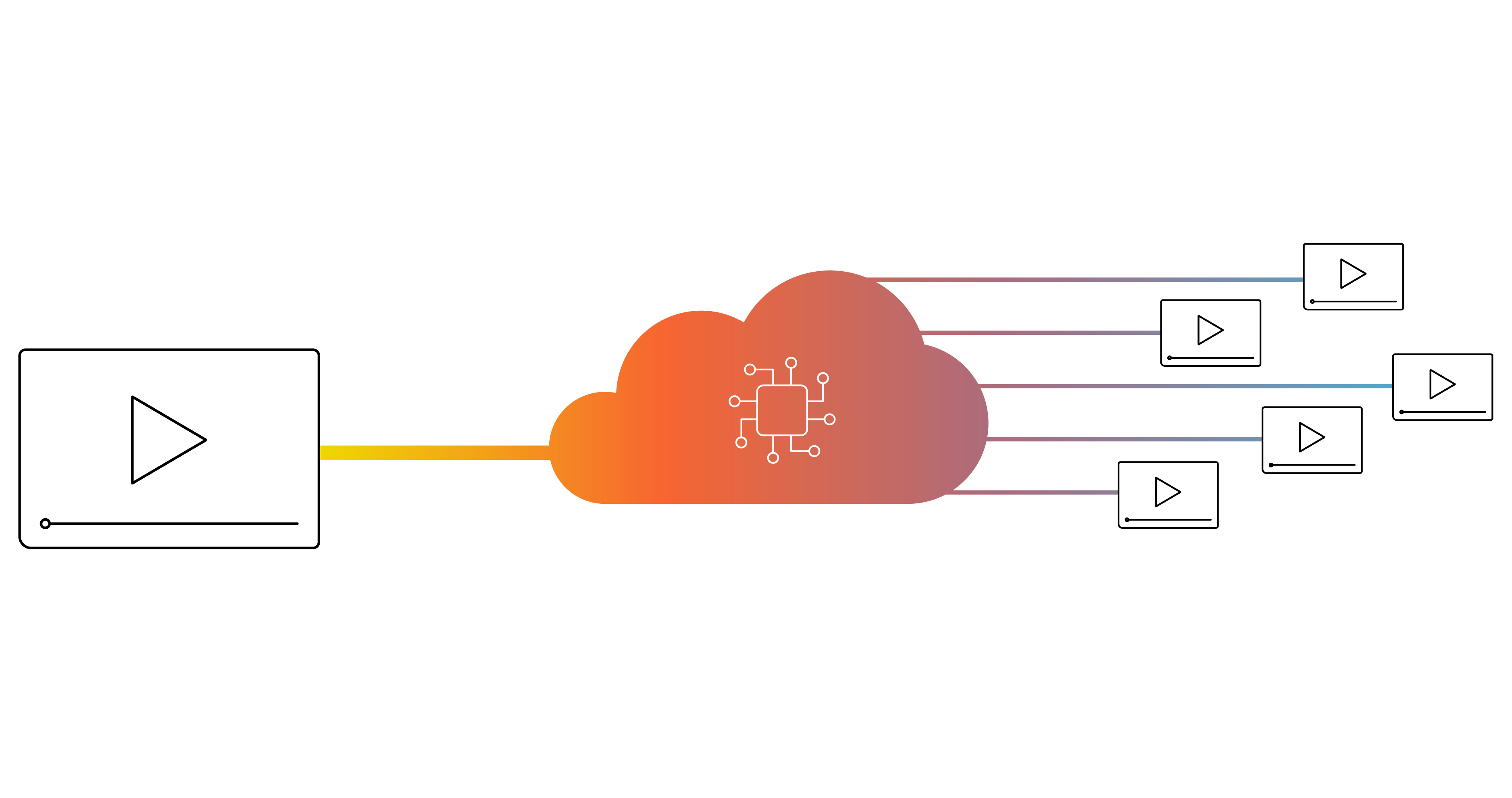

HTTP vs Other Protocols

Once the file is packaged, the protocol used to deliver it will affect performance and compatibility. Here’s what you’ll need to know about HTTP and how it compares to other delivery protocols.

- HTTP. HTTP (Hypertext Transfer Protocol) is the standard protocol of the web. Because of this, HTTP-based streaming formats can leverage ubiquitous browser support and the massive, scaled infrastructure already in place for the web. Streaming formats such as HLS (HTTP Live Streaming) and DASH (Dynamic Adaptive Streaming over HTTP) are ideal for video streaming because they reach large audiences efficiently. However, because players download and buffer whole segments before playing them, HTTP streaming typically incurs higher latency, and quality and reliability can vary with the viewer’s network conditions.

- WebRTC / SRT / RIST. Other protocols like WebRTC (Web Real-Time Communication), SRT (Secure Reliable Transport), and RIST (Reliable Internet Stream Transport) offer greater control over latency, quality, and reliability. They are better suited to real-time applications like video conferencing, live video production, contribution streams, and online gaming. They often deliver lower latency and more consistent quality but can be difficult or costly to scale to large audiences, which makes them a better fit for smaller audiences than for widespread video streaming.
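A rough model shows where HTTP streaming latency comes from. RFC 8216, the HLS specification, says a client should not start playback less than three target durations from the end of a live playlist, so segment length largely sets the floor. The sketch below adds that holdback to encode/packaging and network delays whose default values are assumed placeholders, not measurements.

```python
def hls_live_latency(target_duration_s: float,
                     encode_package_s: float = 1.0,
                     network_s: float = 0.5,
                     holdback_segments: int = 3) -> float:
    """Rough glass-to-glass latency estimate for standard (non-low-latency) HLS.

    holdback_segments=3 reflects RFC 8216's guidance that clients start at
    least three target durations from the live edge. encode_package_s and
    network_s are assumed placeholder values.
    """
    return encode_package_s + network_s + holdback_segments * target_duration_s


for seg in (6, 4, 2):
    print(f"{seg}s segments -> ~{hls_live_latency(seg):.1f}s behind live")
# 6s segments -> ~19.5s behind live
# 4s segments -> ~13.5s behind live
# 2s segments -> ~7.5s behind live
```

Shorter segments lower the estimate, at the cost of more HTTP requests and more frequent playlist reloads.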

Streaming Protocols

HTTP adaptive streaming protocols are designed specifically for delivering video and audio content over HTTP. They enable adaptive bitrate playback, in which the player switches between quality levels as the viewer’s network conditions change, reducing buffering and delivering an overall smoother viewing experience. There are two primary protocols of this kind, as well as one intermediate format.

- HLS. Apple’s HLS is one of the most widely used streaming protocols. It breaks video content into small segments delivered over HTTP, which the player downloads and plays in sequence, and it offers multiple renditions at different bitrates so the player can adapt to network conditions and reduce buffering. It’s compatible with a wide range of devices.

- MPEG-DASH. DASH, an open standard developed by the Moving Picture Experts Group (MPEG), is similar to HLS in that it segments the video content. Because each segment is available at several quality levels, the player can switch quality mid-stream to match current network conditions. It’s highly flexible, since it is codec-agnostic, and it is widely supported across browsers and devices.

- CMAF. Common Media Application Format (CMAF), while not technically a protocol, is an intermediate format designed to work with both HLS and DASH. Apple and Microsoft developed it to reduce the complexity, cost, and storage involved in delivering online video. Instead of creating and storing one set of MPEG-TS segments for HLS and a separate set of fragmented MP4 segments for DASH, CMAF uses a single set of fragmented MP4 media files for both HLS and DASH streaming.
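Adaptive playback starts with a ladder of renditions advertised in a manifest. The Python sketch below renders a minimal HLS multivariant (master) playlist using the standard `#EXTM3U` and `#EXT-X-STREAM-INF` tags, then applies a simple throughput-based rule to pick a rendition. The ladder, URIs, bitrates, and 0.8 safety factor are hypothetical; production players use more sophisticated ABR logic that also considers buffer levels.

```python
from dataclasses import dataclass


@dataclass
class Rendition:
    uri: str
    bandwidth: int  # peak bits per second, used for the HLS BANDWIDTH attribute
    width: int
    height: int
    codecs: str     # codec strings for the HLS CODECS attribute


# Hypothetical three-rung ladder; bitrates are illustrative.
LADDER = [
    Rendition("480p/index.m3u8", 1_400_000, 854, 480, "avc1.64001e,mp4a.40.2"),
    Rendition("720p/index.m3u8", 3_000_000, 1280, 720, "avc1.64001f,mp4a.40.2"),
    Rendition("1080p/index.m3u8", 6_000_000, 1920, 1080, "avc1.640028,mp4a.40.2"),
]


def master_playlist(ladder: list[Rendition]) -> str:
    """Render an HLS multivariant (master) playlist listing every rendition."""
    lines = ["#EXTM3U"]
    for r in ladder:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth},"
            f'RESOLUTION={r.width}x{r.height},CODECS="{r.codecs}"'
        )
        lines.append(r.uri)
    return "\n".join(lines) + "\n"


def pick_rendition(ladder: list[Rendition], throughput_bps: float,
                   safety: float = 0.8) -> Rendition:
    """Simple throughput-based ABR: highest rendition within a safety margin."""
    budget = throughput_bps * safety
    fitting = [r for r in ladder if r.bandwidth <= budget]
    if fitting:
        return max(fitting, key=lambda r: r.bandwidth)
    # Even the lowest rung exceeds the budget: fall back to it anyway.
    return min(ladder, key=lambda r: r.bandwidth)


print(master_playlist(LADDER))
print(pick_rendition(LADDER, 5_000_000).uri)  # 720p/index.m3u8
print(pick_rendition(LADDER, 1_000_000).uri)  # 480p/index.m3u8
```

A DASH manifest (MPD) describes the same ladder as `Representation` elements inside an `AdaptationSet`, and the player-side selection logic is conceptually the same.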

Codec Compatibility with Streaming Protocols

- H.264 (AVC). AVC is natively supported by HLS, DASH, and nearly all other streaming protocols. Because almost every device can decode it, AVC streams play reliably for the widest range of viewers.

- H.265 (HEVC). HEVC is supported by HLS, particularly on Apple devices running iOS 11 and later. DASH is also compatible with HEVC but requires compatible playback software or devices.

- Open-source codecs. VP9 and AV1 are both commonly delivered with DASH and play in major non-Apple browsers. Support for them in HLS is more limited and depends on the playback device.
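Whichever protocol you use, the manifest tells the player which codec each rendition uses through a codec string (the HLS `CODECS` attribute or the DASH `codecs` attribute), and players typically use it to skip renditions they can’t decode. The helpers below build the common RFC 6381-style forms for H.264, VP9, and AV1; the profile and level values in the examples are illustrative choices.

```python
def avc_codec_string(profile_idc: int, constraint_flags: int, level_idc: int) -> str:
    """H.264: 'avc1.' + profile, constraint flags, and level as two hex digits each."""
    return f"avc1.{profile_idc:02X}{constraint_flags:02X}{level_idc:02X}"


def vp9_codec_string(profile: int, level: int, bit_depth: int) -> str:
    """VP9: 'vp09.PP.LL.DD', with the level written as ten times its value (4.0 -> 40)."""
    return f"vp09.{profile:02d}.{level:02d}.{bit_depth:02d}"


def av1_codec_string(profile: int, seq_level_idx: int, tier: str, bit_depth: int) -> str:
    """AV1: 'av01.P.LLT.DD', where T is the tier ('M' = Main, 'H' = High)."""
    return f"av01.{profile}.{seq_level_idx:02d}{tier}.{bit_depth:02d}"


print(avc_codec_string(100, 0x00, 40))  # avc1.640028   (High profile, level 4.0)
print(vp9_codec_string(0, 40, 8))       # vp09.00.40.08 (profile 0, level 4.0, 8-bit)
print(av1_codec_string(0, 8, "M", 8))   # av01.0.08M.08 (Main profile, level 4.0, Main tier, 8-bit)
```

Declaring accurate codec strings lets a player reject an unsupported rendition before downloading any of its segments.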

Packaging Considerations

Security

Preventing unauthorized playback is a key consideration for many media brands. However, implementing Digital Rights Management (DRM) can be complex due to variations in HLS/DASH package formats. To help streamline DRM implementation, the industry has developed a standard encryption model called Common Encryption (CENC).

CENC works with the CMAF format, allowing content producers to encode and encrypt media once, then rely on different DRM systems to decrypt it as needed. In browsers, this is made possible by Encrypted Media Extensions (EME), a W3C JavaScript API that extends the HTML5 video element. EME works with CENC to standardize how encrypted video content is handled. Essentially, you encrypt your video with a 128-bit AES content key. To decrypt and play the video, you can leverage DRM systems like Google Widevine, Apple FairPlay, and Microsoft PlayReady. These DRM providers manage the keys necessary to unlock the encrypted content and ensure that it can only be accessed by authorized users.

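Although CENC was intended to provide a universal method for encrypting media, in practice you may still need to handle HLS and CMAF/DASH content separately. CENC defines more than one protection scheme, notably AES-CTR (“cenc”) and AES-CBC with pattern encryption (“cbcs”), and not every DRM system or device supports both.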
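One concrete way CENC schemes differ is in how much of each video sample gets encrypted. The “cbcs” scheme typically applies pattern encryption to video, commonly 1 encrypted 16-byte block followed by 9 clear blocks, while “cenc” encrypts the whole protected range with AES-CTR. The sketch below only computes which byte ranges a crypt:skip pattern would encrypt; it performs no cryptography and simplifies by treating the entire region as protected (real samples also keep headers such as NAL unit headers in the clear).

```python
BLOCK = 16  # AES block size in bytes


def cbcs_encrypted_ranges(protected_len: int, crypt_blocks: int = 1,
                          skip_blocks: int = 9) -> list[tuple[int, int]]:
    """Byte ranges [start, end) encrypted by a crypt:skip block pattern.

    The pattern repeats from the start of the protected range. In this sketch
    a pattern cut short by the end of the range still encrypts any whole
    blocks left, and a trailing partial block (< 16 bytes) stays in the clear.
    """
    ranges = []
    stride = (crypt_blocks + skip_blocks) * BLOCK
    for start in range(0, protected_len, stride):
        whole_blocks = min(crypt_blocks, (protected_len - start) // BLOCK)
        if whole_blocks:
            ranges.append((start, start + whole_blocks * BLOCK))
    return ranges


ranges = cbcs_encrypted_ranges(1000)
encrypted = sum(end - start for start, end in ranges)
print(ranges)
# [(0, 16), (160, 176), (320, 336), (480, 496), (640, 656), (800, 816), (960, 976)]
print(f"{encrypted / 1000:.1%} of the protected bytes are encrypted")  # 11.2% ...
```

Encrypting only about a tenth of the data reduces decryption work on the playback device, which is one reason the pattern approach is used for video.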

Monetization (Ad Insertion)

Modern HTTP streaming standards make it much easier to monetize video content through ad insertion. Manifests, the instructions that tell the video player what to fetch, list the video segments (often called chunks) in order. These chunks can be separate media files (e.g., segment1.ts, segment2.ts, segment3.ts) or byte ranges within a single file (e.g., mediaFile.mp4, bytes 0 to 1000). Manifests can also carry ad signaling information about when and where ads should be inserted. This monetization functionality continues to grow, including Apple’s 2024 announcement of support for the Interstitial attribute in HLS.
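In an HLS media playlist, separate chunk files are simply listed by URI, while byte ranges use the `#EXT-X-BYTERANGE:<length>@<offset>` tag against a single file. The sketch below renders the byte-range form for a hypothetical `program.ts`; the durations and sizes are made up (real segments are far larger), and the first entry corresponds to bytes 0 to 1000.

```python
import math


def byte_range_playlist(uri: str, segments: list[tuple[float, int]]) -> str:
    """Render an HLS VOD media playlist whose segments are byte ranges of one file.

    segments: (duration_seconds, length_bytes) pairs stored back to back in `uri`.
    """
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:4",  # EXT-X-BYTERANGE requires protocol version 4 or higher
        f"#EXT-X-TARGETDURATION:{math.ceil(max(d for d, _ in segments))}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    offset = 0
    for duration, length in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(f"#EXT-X-BYTERANGE:{length}@{offset}")  # length@start offset
        lines.append(uri)
        offset += length
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


print(byte_range_playlist("program.ts", [(6.0, 1001), (6.0, 987), (4.5, 760)]))
```

Byte ranges let a packager store one file per rendition instead of thousands of small segment files, while the player still requests the content chunk by chunk.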

In addition to specifying ad breaks, the manifest can point the player to alternative content for better personalization. With “virtual manifests,” each viewer or group of viewers receives a personalized manifest into which specific ads are stitched in real time based on who’s watching. This is the core of our Server-Side Ad Insertion (SSAI) solution, which ensures that each viewer sees targeted ads tailored to their preferences and demographics.
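At its simplest, manifest-level stitching means splicing ad segment URIs into each viewer’s playlist and marking the boundaries with `#EXT-X-DISCONTINUITY`, the HLS tag that warns the player that the next segment may use different timestamps or encoding parameters. The sketch below is a toy illustration under stated assumptions, not a description of any production SSAI system: the ad inventory, audience labels, and URIs are hypothetical, and real systems also handle ad decisioning, tracking beacons, and live playlists.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    uri: str
    duration: float  # seconds


# Hypothetical ad inventory keyed by audience label.
AD_POOL = {
    "sports_fan": [Segment("ads/sneakers/0.ts", 6.0), Segment("ads/sneakers/1.ts", 6.0)],
    "default": [Segment("ads/generic/0.ts", 6.0), Segment("ads/generic/1.ts", 6.0)],
}


def personalized_playlist(content: list[Segment], break_after: int, audience: str) -> str:
    """Build one viewer's HLS media playlist with audience-specific ads stitched in."""
    ads = AD_POOL.get(audience, AD_POOL["default"])
    body: list[str] = []
    for i, seg in enumerate(content):
        body += [f"#EXTINF:{seg.duration:.3f},", seg.uri]
        if i == break_after:
            # The following segment may use different timestamps/encoding.
            body.append("#EXT-X-DISCONTINUITY")
            for ad in ads:
                body += [f"#EXTINF:{ad.duration:.3f},", ad.uri]
            body.append("#EXT-X-DISCONTINUITY")  # back to the main content
    target = math.ceil(max(s.duration for s in content + ads))
    header = ["#EXTM3U", "#EXT-X-VERSION:3",
              f"#EXT-X-TARGETDURATION:{target}", "#EXT-X-MEDIA-SEQUENCE:0"]
    return "\n".join(header + body + ["#EXT-X-ENDLIST"]) + "\n"


content = [Segment(f"show/{i}.ts", 6.0) for i in range(3)]
print(personalized_playlist(content, break_after=0, audience="sports_fan"))
```

Because only the text manifest differs per viewer, the content and ad segments themselves can still be cached and shared across the whole audience.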

Another use case for manifest manipulation is offering viewers alternative programming. For example, a broadcaster like CBS might have a national feed airing a football game. All viewers connected to this feed will see the game—unless they are in a specific market, such as Cleveland. In Cleveland, the manifest instructions can point the player to a secondary feed showing alternative content during the game. This process, known as “blackout” or “alternative” programming, ensures compliance with regional broadcast rights and provides a tailored viewing experience for different audiences.
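The routing decision behind blackout programming can be sketched as a lookup: given the viewer’s market and the current time, return either the national manifest or an alternate one. Everything below is hypothetical (the rule format, market names, dates, and URLs), and determining a viewer’s market is out of scope for the sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Blackout:
    markets: set[str]
    start: datetime
    end: datetime
    alternate_manifest: str


NATIONAL_FEED = "https://example.com/national/master.m3u8"  # hypothetical URL

BLACKOUTS = [
    Blackout({"cleveland"},
             datetime(2024, 9, 8, 17, 0, tzinfo=timezone.utc),
             datetime(2024, 9, 8, 20, 30, tzinfo=timezone.utc),
             "https://example.com/cleveland-alt/master.m3u8"),
]


def manifest_for(market: str, now: datetime) -> str:
    """Return the manifest URL a viewer in `market` should receive at `now`."""
    for rule in BLACKOUTS:
        if market in rule.markets and rule.start <= now < rule.end:
            return rule.alternate_manifest
    return NATIONAL_FEED


game_time = datetime(2024, 9, 8, 18, 0, tzinfo=timezone.utc)
print(manifest_for("cleveland", game_time))  # alternate feed
print(manifest_for("columbus", game_time))   # national feed
```

Once the blackout window ends, the same lookup returns the national feed again, so viewers are switched back without any change on the player side.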

It should be noted that neither HLS nor DASH is more effective at ad insertion than the other. Both enable it in the same way, through manifests, and most brands use both to maximize device compatibility. That said, the underlying codecs and encoding configurations of your content and ads should match to provide the best viewing experience; mismatched content and ads can cause playback and quality issues. An SSAI solution like ours avoids this by conditioning the ads with the same ingest profile used to create the content.

Accessibility

Another key consideration is accessibility, including multiple language tracks and subtitles. Fortunately, this can be managed efficiently through manifests, so HLS and DASH are equally capable here. Localized variants are created at runtime and listed in the manifest, which then points to them and their associated ancillary streams: video renditions, audio renditions, and caption/subtitle renditions.

For example, our partner, SyncWords, ingests our live stream and produces secondary audio tracks (translated and dubbed in alternative languages) and subtitle/caption tracks. Initially, the original encoded live stream contains only the primary assets, such as:

- Master Manifest

- Video Variant 720p

- Video Variant 1080p

- Audio Variant - English

- Caption Variant - English

SyncWords takes the master manifest, extracts one of the audio tracks and/or the caption track, and performs real-time translation. This produces multi-language captions and can apply AI dubbing to generate alternative audio tracks in which the speaker’s words are rendered in other languages. It’s a clear example of how technology continues to make producing and consuming content more efficient, inclusive, and accessible.
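In HLS, each language track becomes an `#EXT-X-MEDIA` entry in the master manifest, grouped by `GROUP-ID` and referenced from each video variant’s `AUDIO` and `SUBTITLES` attributes. The sketch below renders a manifest in which translated Spanish tracks sit alongside the original English ones; the URIs, group IDs, bitrates, and track names are hypothetical and do not describe SyncWords’ actual output.

```python
from dataclasses import dataclass


@dataclass
class Track:
    kind: str      # "AUDIO" or "SUBTITLES"
    language: str  # language tag, e.g. "en" or "es"
    name: str
    uri: str


GROUP_IDS = {"AUDIO": "aud", "SUBTITLES": "subs"}


def media_tag(track: Track, default: bool) -> str:
    flag = "YES" if default else "NO"
    return (f'#EXT-X-MEDIA:TYPE={track.kind},GROUP-ID="{GROUP_IDS[track.kind]}",'
            f'LANGUAGE="{track.language}",NAME="{track.name}",'
            f'DEFAULT={flag},AUTOSELECT=YES,URI="{track.uri}"')


def master_with_languages(tracks: list[Track],
                          variants: list[tuple[int, str, str]]) -> str:
    """variants: (bandwidth, resolution, uri). The first audio track is the
    default; subtitles are off by default but may be auto-selected."""
    lines = ["#EXTM3U"]
    have_default_audio = False
    for t in tracks:
        is_default = t.kind == "AUDIO" and not have_default_audio
        have_default_audio = have_default_audio or is_default
        lines.append(media_tag(t, is_default))
    for bandwidth, resolution, uri in variants:
        # Each video variant points at the shared audio and subtitle groups.
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={resolution},"
                     f'CODECS="avc1.640028,mp4a.40.2",AUDIO="aud",SUBTITLES="subs"')
        lines.append(uri)
    return "\n".join(lines) + "\n"


tracks = [
    Track("AUDIO", "en", "English", "audio/en/index.m3u8"),
    Track("SUBTITLES", "en", "English", "subs/en/index.m3u8"),
    # Tracks added after translation and AI dubbing (hypothetical URIs).
    Track("AUDIO", "es", "Spanish (AI dub)", "audio/es/index.m3u8"),
    Track("SUBTITLES", "es", "Spanish", "subs/es/index.m3u8"),
]
variants = [(3_000_000, "1280x720", "video/720p/index.m3u8"),
            (6_000_000, "1920x1080", "video/1080p/index.m3u8")]
print(master_with_languages(tracks, variants))
```

Adding a language only appends new `#EXT-X-MEDIA` entries; the video variants and their segments stay unchanged.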

History of Video Streaming

Choosing the HTML5 video formats that are best for your use case requires an understanding of the video encoding and packaging processes. But if you want to prepare your operations for the future, it’s also worth reviewing the history of streaming technology. Many innovations have come and gone, so it helps to note which ones remain and continue to adapt.

Progressive Download and Early Streaming

In the early days of web video, media players like Windows Media Player, QuickTime, and RealPlayer were the primary platforms for viewing. They commonly used progressive download, in which the file was downloaded sequentially and the video could begin playing before the complete file had transferred. While innovative for its time, this method often led to buffering issues and required proprietary software for playback.

Flash

Adobe Flash simplified viewing across the web with a common framework that was available across many different browsers. Although Flash video brought less buffering and better overall performance, it relied on a heavy, inefficient browser plugin that in some cases presented significant security risks.

HLS

Apple’s iPhone and, later, the iPad significantly changed the video streaming landscape. Citing security and battery-life concerns, Apple declined to support Flash on its mobile devices. Around the same time, the emerging HTML5 standard introduced a native video element that allowed browsers to play video without plugins. Building on this, Apple introduced HLS, which uses HTTP manifests to tell the player which stream segments to request over HTTP. This innovation built on existing web infrastructure to enable scalable video distribution through standard HTTP requests and the HTML5 video element.

MPEG-DASH

Following Apple’s lead, MPEG introduced a vendor-neutral standard called MPEG-DASH (Dynamic Adaptive Streaming over HTTP). Like HLS, MPEG-DASH uses segment-based streaming over HTTP. However, because it was developed by a neutral standards body, MPEG-DASH saw wide adoption among other device manufacturers.

CMAF

CMAF was introduced as a standard that allows content to be prepared once, in a common media format that both MPEG-DASH and HLS manifests can reference. This eliminates the need to create separate media assets for each format, streamlining content preparation and improving efficiency.

Complications and Innovations

Despite these advancements, HTML5 video streaming remains a complex field. Challenges like ultra-low latency, blackout rules, and HDR metadata have required a host of extensions to MPEG-DASH, HLS, and other HTML5-supported video formats. That said, these standards continue to evolve rapidly. For instance, HLS received several new extensions to enhance ad delivery and support HDR declarations during Apple’s 2024 Worldwide Developers Conference (WWDC).

As history shows, innovation will continue as HTML5 video formats and streaming protocols evolve. A foundational understanding of the processes, software, and tools involved will help you adapt more quickly to new developments while ensuring the best possible viewing experience.